Note

Go to the end to download the full example code

Multi-Response Linear Regression#

Introduction: model setting#

Multi-response linear regression (a.k.a., multi-task learning) aims at predicting multiple responses at the same time, and thus, it is a natural extension for classical linear regression where the response is univariate. Multi-response linear regression (MRLR) is very helpful for the analysis of correlated response such as chemical measurements for soil samples and microRNAs associated with Glioblastoma multiforme cancer. Suppose \(y\) is an \(m\)-dimensional response variable, \(x\) is \(p\)-dimensional predictors, \(B \in R^{m \times p}\) is the coefficient matrix, the MMLR model for the multivariate response is given by

where \(\epsilon\) is an \(m\)-dimensional random noise variable with zero mean.

Due to the Occam's razor principle or the high-dimensionality of predictors, it is meaningful to use a small amount of predictors to conduct multi-task learning. For example, understanding the relationship between gene expression and symptoms of a disease has significant importance in identifying potential markers. Many diseases usually involve multiple manifestations and those manifestations are usually related. In some cases, it makes sense to predict those manifestations using a small but the same set of predictors. The best subset selection problem under the MMLR model is formulated as

where, \(Y \in R^{n \times m}\) and \(X \in R^{n \times p}\) record \(n\) observations` response and predictors, respectively. Here \(\| B \|_{0, 2} = \sum_{i = 1}^{p} I(B_{i\cdot} = {\bf 0})\), where \(B_{i\cdot}\) is the \(i\)-th row of coefficient matrix \(B\) and \({\bf 0} \in R^{m}\) is an all-zero vector.

Simulated Data Example#

We use an artificial dataset to demonstrate how to solve best subset selection problem for MMLR with abess package.

The make_multivariate_glm_data() function provides a simple way to generate suitable dataset for this task.

The synthetic data have 100 observations with 3-dimensional responses and 20-dimensional predictors.

Note that there are three predictors having an impact on the responses.

from abess.datasets import make_multivariate_glm_data

import numpy as np

np.random.seed(0)

n = 100

p = 20

M = 3

k = 3

data = make_multivariate_glm_data(n=n, p=p, M=M, k=k, family='multigaussian')

print(data.y[0:5, ])

print(data.coef_)

print("non-zero: ", set(np.nonzero(data.coef_)[0]))

[[-4.47877355 -2.83660944 9.01903871]

[ 6.89372511 -2.07996131 -4.30416456]

[ 0.98334 -1.85903489 -5.4657045 ]

[ 1.9803706 1.8026655 -6.35946312]

[ 2.0746701 1.97692084 0.26295969]]

[[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0.8880576 2.35738133 0.33938644]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 2.33460874 -3.0222518 -1.63030259]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[-0.25565796 -0.25578781 -3.82878688]

[ 0. 0. 0. ]]

non-zero: {2, 18, 5}

Model Fitting#

To carry out sparse mutli-task learning, we can call the

MultiTaskRegression like:

from abess import MultiTaskRegression

model = MultiTaskRegression()

model.fit(data.x, data.y)

After fitting, model.coef_ contains the predicted coefficients:

print(model.coef_)

print("non-zero: ", set(np.nonzero(model.coef_)[0]))

[[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0.82745448 2.38786974 0.32939017]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 2.42521165 -3.12093333 -1.76555086]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[ 0. 0. 0. ]

[-0.2299864 -0.15746641 -3.69082244]

[ 0. 0. 0. ]]

non-zero: {2, 18, 5}

The outputs show that the support set is correctly identifying and the parameter estimation approaches to the truth.

More on the results#

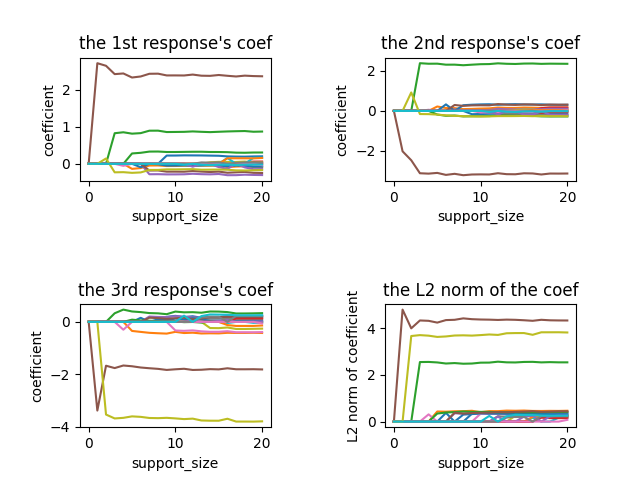

Since there are three responses, we have three solution paths, which correspond to three responses, respectively.

To plot the figure, we can fix the support_size at different levels:

import matplotlib.pyplot as plt

coef = np.zeros((3, 21, 20))

for s in range(21):

model = MultiTaskRegression(support_size=s)

model.fit(data.x, data.y)

for y in range(3):

coef[y, s, :] = model.coef_[:, y]

plt.subplot(2,2,1)

for i in range(20):

plt.plot(coef[0, :, i])

plt.xlabel('support_size')

plt.ylabel('coefficient')

plt.title('the 1st response\'s coef')

plt.subplot(2,2,2)

for i in range(20):

plt.plot(coef[1, :, i])

plt.xlabel('support_size')

plt.ylabel('coefficient')

plt.title('the 2nd response\'s coef')

plt.subplot(2,2,3)

for i in range(20):

plt.plot(coef[2, :, i])

plt.xlabel('support_size')

plt.ylabel('coefficient')

plt.title('the 3rd response\'s coef')

plt.subplot(2,2,4)

coef_norm =np.sum(coef**2, axis = 0)**0.5

for i in range(20):

plt.plot(coef_norm[:, i])

plt.xlabel('support_size')

plt.ylabel('L2 norm of coefficient')

plt.title('the L2 norm of the coef')

plt.subplots_adjust(wspace=0.6,hspace=1)

plt.show()

The abess R package also supports MRLR.

For R tutorial, please view https://abess-team.github.io/abess/articles/v06-MultiTaskLearning.html.

Total running time of the script: (0 minutes 0.277 seconds)